Solution #1

This is the most recent solution: download all the models you need yourself. The advantage is that you keep control over where the model is stored, which makes it easier to deploy in environments that have no internet access. The steps are fairly simple, and Adam Hughes shared this solution.

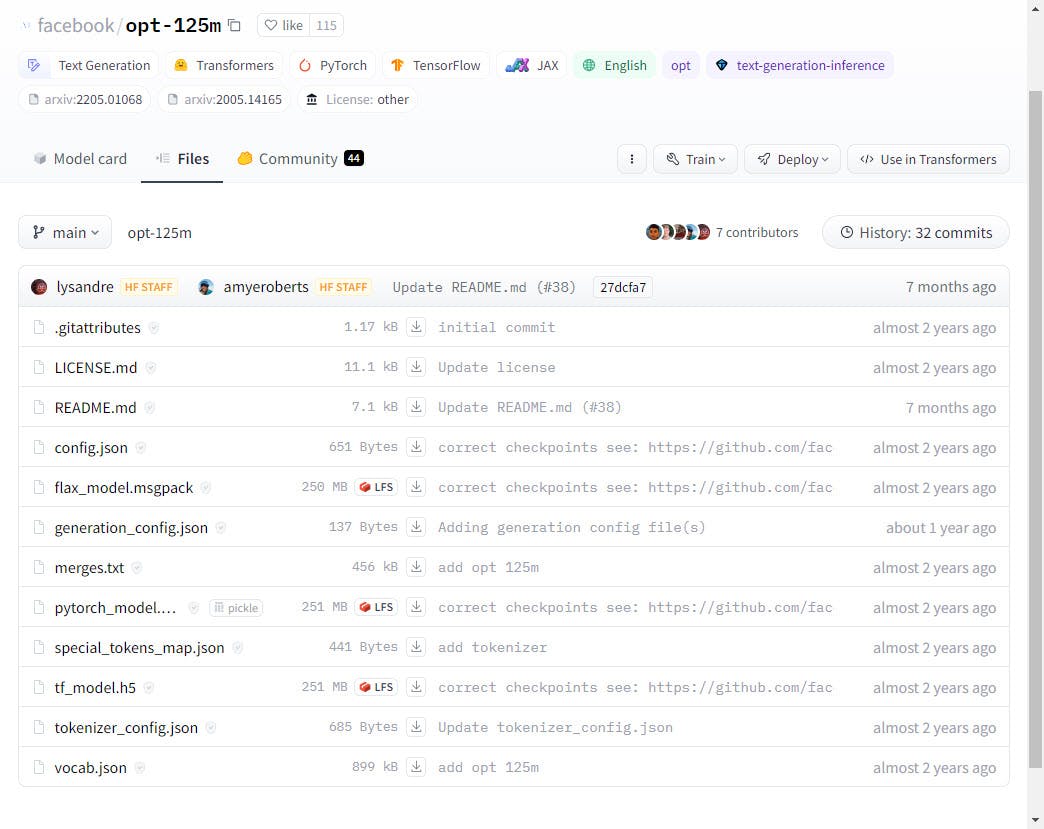

Choose a model to use

Download all the model files and put them in a folder local to your project; in this case it would be /models/facebook/opt-125m

Pass the full path to the model folder as the model parameter when using it

Instead of --model facebook/opt-125m we do --model /path/to/facebook/opt-125m
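If you prefer to script these steps, here is a minimal sketch in Python. It assumes the huggingface_hub and transformers packages are installed and that your models live in a folder called models next to your code. The helper names (local_model_dir, download_model, load_local_model) are made up for this example, and AutoModelForCausalLM is an assumption that fits a text-generation model like facebook/opt-125m.

```python
from pathlib import Path


def local_model_dir(model_id: str, models_root: str = "models") -> Path:
    """Map a Hub id such as 'facebook/opt-125m' to <models_root>/facebook/opt-125m."""
    return Path(models_root).joinpath(*model_id.split("/"))


def download_model(model_id: str, models_root: str = "models") -> Path:
    """Download every file of the model repo into the local folder (needs internet once)."""
    # Imported lazily so the path helper above works without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    target = local_model_dir(model_id, models_root)
    snapshot_download(repo_id=model_id, local_dir=str(target))
    return target


def load_local_model(model_dir: Path):
    """Load the tokenizer and model from disk only, without contacting huggingface.co."""
    # Assumption: a causal language model; swap the Auto class for other model types.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(str(model_dir), local_files_only=True)
    model = AutoModelForCausalLM.from_pretrained(str(model_dir), local_files_only=True)
    return tokenizer, model


# Hypothetical usage, run once on a machine with internet access:
#   path = download_model("facebook/opt-125m")
# and afterwards, on the offline machine:
#   tokenizer, model = load_local_model(local_model_dir("facebook/opt-125m"))
```

Passing local_files_only=True is optional when you give a folder path, but it makes the intent explicit: if a file is missing, the load fails right away instead of trying to reach the Hub.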

Thanks to Adam Hughes we have another solution for working with our Huggingface models.

Extra: Docker!

There are two options for using local models within Docker: the first copies the models into your Docker image, and the second uses Docker Compose to mount the models folder into a service.

Option 1

If you are using Docker, as most engineers and scientists working with these models do, make sure you copy the models into your image.

COPY /path/to/models /mnt/models

Then you can run the docker container with the model as a reference as shown below.

docker run ... --model /mnt/models/facebook/opt-125m

Option 2

Alternatively you can use docker-compose to mount the folder as a docker volume as shown below.

version: '3.8'

services:
  local:
    build: .
    # entrypoint is an optional way to run the Python application
    # with its model as a reference for app.py
    entrypoint: python3 app.py
    command: --model /mnt/models/facebook/opt-125m
    volumes:
      - ./models:/mnt/models
    ports:
      - "8000:8000"
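Both Docker options pass --model to the application, so here is a rough sketch of how an app.py could read that flag. The post does not show the real app.py, so everything here (parse_args, is_local_model, main) is hypothetical. The check for config.json assumes a standard transformers model folder.

```python
import argparse
from pathlib import Path


def parse_args(argv=None) -> argparse.Namespace:
    """Parse the command line; --model is the flag used in the Docker examples."""
    parser = argparse.ArgumentParser(description="Run the app against a model.")
    parser.add_argument("--model", required=True,
                        help="Hub id or path to a local model folder")
    return parser.parse_args(argv)


def is_local_model(model: str) -> bool:
    """True when `model` is a folder holding config.json (assumed marker of a model folder)."""
    return (Path(model) / "config.json").is_file()


def main(argv=None) -> int:
    args = parse_args(argv)
    if is_local_model(args.model):
        print(f"Using local model at {args.model}")
    else:
        # A bare id such as facebook/opt-125m would be fetched from huggingface.co.
        print(f"{args.model} is not a local model folder")
    return 0


# In a real app.py you would finish with:
#   raise SystemExit(main())
```

With the volume mounted as above, main(["--model", "/mnt/models/facebook/opt-125m"]) would take the local branch inside the container, as long as the model files were downloaded into ./models on the host.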

Solution #2

This solution might not work anymore, but it was still working as recently as March 2024.

With LLMs and AI models now being used by nearly everyone working in Python, a small issue comes up. For Python development you should always have a virtual environment; if you don't have one, do the following in Windows.

PS D:\projects\huggingface> python -m venv .venv

PS D:\projects\huggingface> .\.venv\Scripts\activate

After executing the previous commands you will have a folder .venv containing all of your Python libraries.

Now that you have your virtual environment set up and your libraries installed, the next step is to run your code and let it download the models it needs from huggingface.co. However, the download fails and you get the error below.

requests.exceptions.SSLError: (MaxRetryError("HTTPSConnectionPool(host='huggingface.co', port=443): Max retries exceeded with url: /sentence-transformers/all-MiniLM-L6-v2/resolve/main/tokenizer_config.json (Caused by SSLError(SSLCertVerificationError(1, '[SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: unable to get local issuer certificate (_ssl.c:997)')))"), '(Request ID: 3f85ee44-40d4-4e77-9e4f-4adb32847e54)')

What is the issue? The SSL certificate presented for huggingface.co cannot be verified on your machine, so the connection is refused. If you are conscientious about using Python, you probably already have a virtual environment.
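Before changing anything, it can help to see which certificate settings Python is picking up. The sketch below only prints them. The function name ca_settings is made up for this example. REQUESTS_CA_BUNDLE and CURL_CA_BUNDLE are the environment variables requests consults for a custom CA bundle, and certifi is the package requests normally takes its default bundle from. Whether a proxy or firewall on your network is the cause of the error is an assumption you would need to confirm yourself.

```python
import os
import ssl


def ca_settings() -> dict:
    """Collect certificate-related settings that influence HTTPS verification."""
    paths = ssl.get_default_verify_paths()
    settings = {
        # Environment variables that requests checks for a custom CA bundle.
        "REQUESTS_CA_BUNDLE": os.environ.get("REQUESTS_CA_BUNDLE"),
        "CURL_CA_BUNDLE": os.environ.get("CURL_CA_BUNDLE"),
        # Environment variable OpenSSL uses to override its default CA file.
        "SSL_CERT_FILE": os.environ.get("SSL_CERT_FILE"),
        # Defaults compiled into the OpenSSL build that Python links against.
        "openssl_cafile": paths.cafile,
        "openssl_capath": paths.capath,
    }
    try:
        import certifi  # optional: only present when requests (or certifi) is installed
        settings["certifi_bundle"] = certifi.where()
    except ImportError:
        settings["certifi_bundle"] = None
    return settings


for name, value in ca_settings().items():
    print(f"{name}: {value}")
```

If one of the environment variables points at a file that no longer exists, or at a bundle that does not include the issuer of the certificate you are being served, that would be consistent with the "unable to get local issuer certificate" message.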

Open the file Lib\site-packages\requests\sessions.py from your virtual environment.

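Go to line 777 (the exact line number may differ with your version of requests) and set verify to False, leaving the original merge_setting call commented out as shown. This turns off SSL certificate verification for requests made from this virtual environment: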

verify = False #merge_setting

There you have it, now you should be able to download those models!
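Since the line number depends on which version of requests you have installed, a small helper can find the line for you. This is only a sketch: the function names are made up, and it assumes the line in question still looks like verify = merge_setting(...), which you should confirm by opening the file.

```python
import importlib.util
from pathlib import Path
from typing import List, Optional


def find_sessions_file() -> Optional[Path]:
    """Return the path of requests/sessions.py in the active environment, if installed."""
    try:
        spec = importlib.util.find_spec("requests.sessions")
    except ModuleNotFoundError:
        return None
    if spec is None or spec.origin is None:
        return None
    return Path(spec.origin)


def find_verify_lines(source: str) -> List[int]:
    """Return 1-based numbers of lines that assign `verify` and mention merge_setting."""
    hits = []
    for number, line in enumerate(source.splitlines(), start=1):
        stripped = line.strip()
        # Also matches an already edited line such as: verify = False #merge_setting
        if stripped.startswith("verify =") and "merge_setting" in stripped:
            hits.append(number)
    return hits


path = find_sessions_file()
if path is None:
    print("requests is not installed in this environment")
else:
    print(path, find_verify_lines(path.read_text(encoding="utf-8")))
```

Run it with the virtual environment activated so that it reports the copy of sessions.py inside .venv and not a system-wide one.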

Conclusion

There you have it, two solutions to help ease the pain of working with these models. The first solution downloads the models locally, and the second bypasses the SSL issue (although some people have reported that it may no longer work).

Reference

https://github.com/huggingface/transformers/issues/17611

https://stackoverflow.com/questions/71692354/facing-ssl-error-with-huggingface-pretrained-models

Prompt used for my cover image: "make an image about hacking a python library for REST requests that is 1600 pixels in width by 840 pixels in height". I then told it to "remove human hands" and ran some filters over the result in Photoshop.